k8s Scheduler Plugin Development Tutorial

By admin

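The previous article, "k8s scheduler kube-scheduler source code analysis", gave an overview of the scheduler and introduced the idea of plugins attached to extension points. This article shows how to develop a custom scheduler.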

The source code for this article is hosted at https://github.com/cfanbo/sample-scheduler.

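Plugin mechanisms

The Kubernetes scheduler supports two kinds of plugins: in-tree and out-of-tree.

- In-tree plugins (built-in plugins) are compiled and shipped as part of the Kubernetes core components. They live in the Kubernetes source tree and are versioned together with Kubernetes. Because they are compiled into the kube-scheduler binary, they need no separate installation or configuration. Examples are the default filter and score plugins, such as NodeResourcesFit, NodeAffinity and TaintToleration.

- Out-of-tree plugins (external plugins) are developed and maintained as independent projects, separate from the Kubernetes core code, and can be deployed and updated on their own. They are written against the scheduler framework's extension points. The plugin code is compiled together with the scheduler framework into a separate scheduler binary, which in this article runs alongside the default kube-scheduler. To use an out-of-tree plugin, you build and deploy that binary yourself and enable the plugin in its scheduler configuration.

In-tree plugins evolve together with the Kubernetes core code. Out-of-tree plugins are developed independently and deployed as their own binary. This makes out-of-tree plugins more flexible: you can customize and extend them as needed. In-tree plugins, by contrast, are bound to the features and constraints of the Kubernetes core.

For upgrades, in-tree plugins stay in lockstep with Kubernetes releases. Out-of-tree plugins can be upgraded, or kept compatible, on their own schedule.

In short, in-tree plugins are part of Kubernetes and can be used directly. Out-of-tree plugins offer more flexibility and customization, at the cost of separate installation and configuration.

In practice, custom plugins are almost always developed with the out-of-tree mechanism.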

Extension points

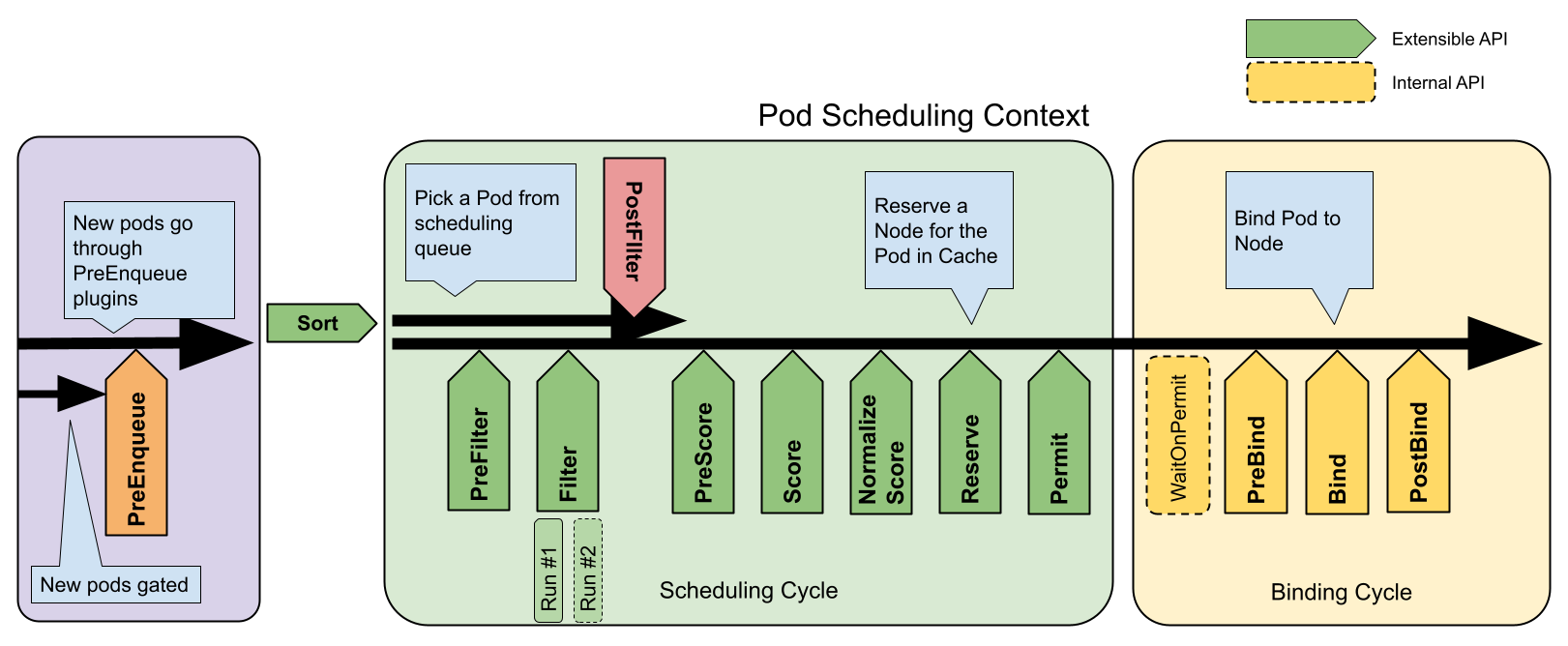

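The figure above shows the scheduling context of a Pod and the extension points exposed by the scheduling framework.

A single plugin can register at several extension points to carry out more complex or stateful tasks.

Each extension point is described in the official Scheduling Framework documentation (listed in the references at the end), so they are not covered again here.

Below we develop a Filter plugin, which runs in the scheduling cycle of a Pod's scheduling context. Its job is simple: check whether a node carries the label cpu=true. If it does, the node is considered feasible. Otherwise the node is filtered out and takes no part in scheduling the Pod.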
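To make the rule concrete, here is a minimal, stdlib-only Go sketch of the predicate the plugin applies. It does not use the scheduler framework. `node` and `passesCPUFilter` are hypothetical stand-ins for `nodeInfo.Node()` and the check inside the real `Filter` method, kept small so the logic can be run and tested on its own.

```go
package main

import "fmt"

// node is a hypothetical, simplified stand-in for a Kubernetes Node:
// just a name and its labels.
type node struct {
	Name   string
	Labels map[string]string
}

// passesCPUFilter applies the rule described above: a node is feasible only
// if it carries the label cpu=true. Reading a missing key (or from a nil map)
// yields "", so unlabeled nodes are rejected.
func passesCPUFilter(n node) bool {
	return n.Labels["cpu"] == "true"
}

func main() {
	nodes := []node{
		{Name: "k8s"},
		{Name: "node1", Labels: map[string]string{"cpu": "true"}},
		{Name: "node2", Labels: map[string]string{"cpu": "false"}},
	}
	for _, n := range nodes {
		fmt.Printf("%s feasible=%v\n", n.Name, passesCPUFilter(n))
	}
}
```

The comparison is exact. A node labelled cpu=True or cpu=1 would still be filtered out.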

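Implementing the plugin

A scheduler plugin must satisfy two requirements:

- It must implement the interface of the extension point it plugs into.

- It must be registered with the scheduling framework.

Different plugins can be enabled at different extension points.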
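The registration requirement can be pictured as a map from plugin name to constructor. Roughly, that is what `app.WithPlugin(name, factory)` adds to the scheduler's plugin registry. The sketch below is a simplified, stdlib-only model loosely patterned on the framework's registry. The types `Plugin`, `Factory` and `Registry` here are hypothetical and much smaller than the real ones: for example, the real factory also receives the plugin arguments and a `framework.Handle`.

```go
package main

import (
	"errors"
	"fmt"
)

// Plugin is a hypothetical, stripped-down stand-in for framework.Plugin.
type Plugin interface{ Name() string }

// Factory plays the role of a plugin constructor such as plugin.New.
type Factory func() (Plugin, error)

// Registry maps plugin names to their constructors.
type Registry map[string]Factory

// Register adds a constructor and refuses duplicate names.
func (r Registry) Register(name string, f Factory) error {
	if _, exists := r[name]; exists {
		return fmt.Errorf("a plugin named %q already exists", name)
	}
	r[name] = f
	return nil
}

// Instantiate builds the plugin registered under name.
func (r Registry) Instantiate(name string) (Plugin, error) {
	f, ok := r[name]
	if !ok {
		return nil, errors.New("plugin " + name + " is not registered")
	}
	return f()
}

type sample struct{}

func (sample) Name() string { return "sample" }

func main() {
	r := Registry{}
	if err := r.Register("sample", func() (Plugin, error) { return sample{}, nil }); err != nil {
		panic(err)
	}
	p, err := r.Instantiate("sample")
	fmt.Println(p.Name(), err) // sample <nil>
}
```

Only registered names can later be enabled in the scheduler configuration. That is why both requirements (implementing the interface and registering the plugin) must be met.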

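Implementing the interface

Every plugin must implement the interface of its extension point. All of these interfaces are defined in https://github.com/kubernetes/kubernetes/blob/v1.27.3/pkg/scheduler/framework/interface.go (excerpt):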

```go
// Plugin is the parent type for all the scheduling framework plugins.
type Plugin interface {
	Name() string
}

// FilterPlugin is an interface for Filter plugins. These plugins are called at the
// filter extension point for filtering out hosts that cannot run a pod.
// This concept used to be called 'predicate' in the original scheduler.
// These plugins should return "Success", "Unschedulable" or "Error" in Status.code.
// However, the scheduler accepts other valid codes as well.
// Anything other than "Success" will lead to exclusion of the given host from
// running the pod.
// NOTE: this is the interface our plugin implements.
type FilterPlugin interface {
	Plugin
	// Filter is called by the scheduling framework.
	// All FilterPlugins should return "Success" to declare that
	// the given node fits the pod. If Filter doesn't return "Success",
	// it will return "Unschedulable", "UnschedulableAndUnresolvable" or "Error".
	// For the node being evaluated, Filter plugins should look at the passed
	// nodeInfo reference for this particular node's information (e.g., pods
	// considered to be running on the node) instead of looking it up in the
	// NodeInfoSnapshot because we don't guarantee that they will be the same.
	// For example, during preemption, we may pass a copy of the original
	// nodeInfo object that has some pods removed from it to evaluate the
	// possibility of preempting them to schedule the target pod.
	Filter(ctx context.Context, state *CycleState, pod *v1.Pod, nodeInfo *NodeInfo) *Status
}

// PreEnqueuePlugin is an interface that must be implemented by "PreEnqueue" plugins.
// These plugins are called prior to adding Pods to activeQ.
// Note: an preEnqueue plugin is expected to be lightweight and efficient, so it's not expected to
// involve expensive calls like accessing external endpoints; otherwise it'd block other
// Pods' enqueuing in event handlers.
type PreEnqueuePlugin interface {
	Plugin
	// PreEnqueue is called prior to adding Pods to activeQ.
	PreEnqueue(ctx context.Context, p *v1.Pod) *Status
}

// LessFunc is the function to sort pod info
type LessFunc func(podInfo1, podInfo2 *QueuedPodInfo) bool

// QueueSortPlugin is an interface that must be implemented by "QueueSort" plugins.
// These plugins are used to sort pods in the scheduling queue. Only one queue sort
// plugin may be enabled at a time.
type QueueSortPlugin interface {
	Plugin
	// Less are used to sort pods in the scheduling queue.
	Less(*QueuedPodInfo, *QueuedPodInfo) bool
}

// ...
```

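To implement a plugin for the PreEnqueue extension point, you implement the PreEnqueuePlugin interface. For the QueueSort extension point, you implement QueueSortPlugin instead. Here we implement the FilterPlugin interface.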
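A single type can serve several extension points simply by implementing several interfaces. The sample plugin below does this with Filter and PreScore. The following stdlib-only sketch uses trimmed-down, hypothetical versions of those interfaces; the real methods take a context, `CycleState`, the Pod and node information. It shows the compile-time `var _ Interface = &T{}` check, and also a runtime type assertion that decides at which extension points a plugin can be enabled. As far as I know, the real framework performs a comparable check when it builds a profile, and refuses to start if a plugin is enabled at an extension point whose interface it does not implement.

```go
package main

import "fmt"

// Hypothetical, simplified stand-ins for the framework interfaces.
type Plugin interface{ Name() string }

type FilterPlugin interface {
	Plugin
	Filter(nodeName string) bool
}

type PreScorePlugin interface {
	Plugin
	PreScore(nodeNames []string) error
}

type sample struct{}

// Compile-time checks: the build fails if *sample stops satisfying either interface.
var _ FilterPlugin = &sample{}
var _ PreScorePlugin = &sample{}

func (*sample) Name() string { return "sample" }

func (*sample) Filter(nodeName string) bool { return nodeName == "node1" }

func (*sample) PreScore(_ []string) error { return nil }

// extensionPoints reports which of the modelled extension points a plugin
// could be enabled at, using runtime type assertions.
func extensionPoints(p Plugin) []string {
	var points []string
	if _, ok := p.(FilterPlugin); ok {
		points = append(points, "filter")
	}
	if _, ok := p.(PreScorePlugin); ok {
		points = append(points, "preScore")
	}
	return points
}

func main() {
	fmt.Println(extensionPoints(&sample{})) // [filter preScore]
}
```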

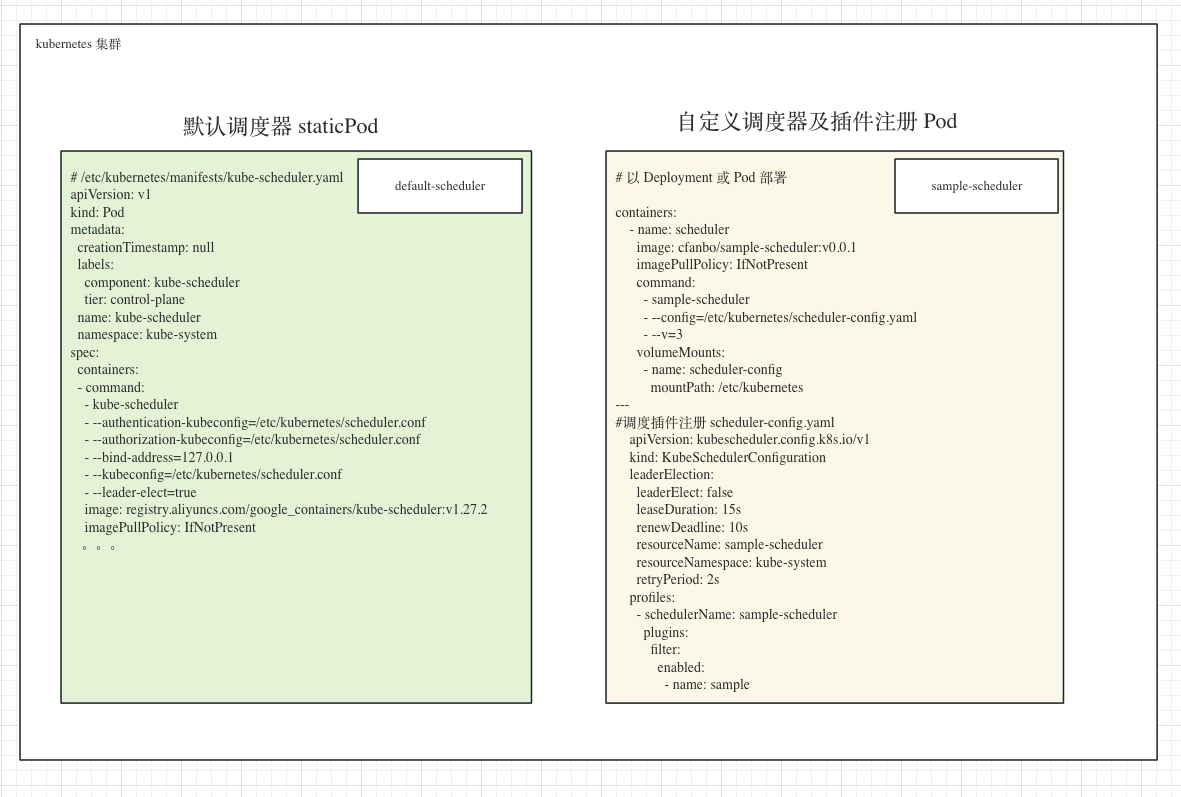

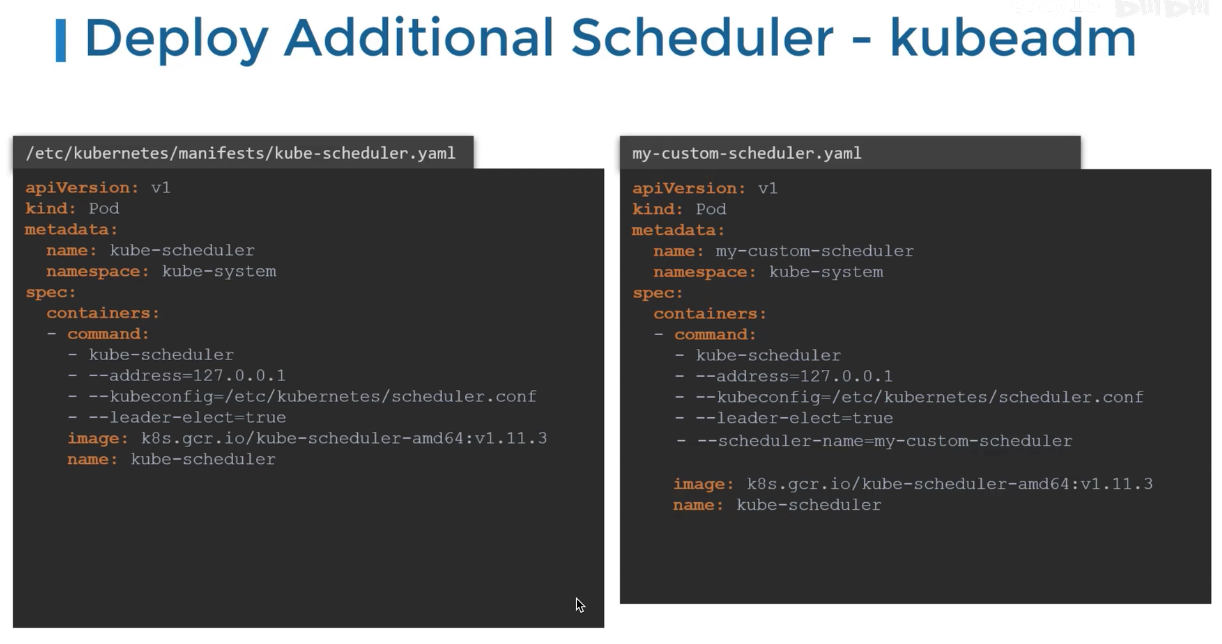

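Kubernetes ships with a default scheduler named default-scheduler. Here we create a custom scheduler named sample-scheduler.

The default scheduler is deployed as a static Pod (left figure). Its manifest is usually /etc/kubernetes/manifests/kube-scheduler.yaml on the control-plane host. A custom scheduler is usually deployed as an ordinary Pod (right figure). A cluster can therefore run several schedulers, and each Pod chooses its scheduler through spec.schedulerName.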
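The scheduler-selection rule can be modelled in a few lines. The sketch assumes, as the comment in the test manifest later in this article states, that a Pod without spec.schedulerName is handled by default-scheduler. `responsibleFor` is a hypothetical helper, not a framework function. In a real scheduler, the list of names comes from the schedulerName fields of its configured profiles.

```go
package main

import "fmt"

// defaultSchedulerName is used when a Pod does not set spec.schedulerName.
const defaultSchedulerName = "default-scheduler"

// responsibleFor reports whether a scheduler whose profiles carry the given
// scheduler names should handle a Pod with the given spec.schedulerName.
func responsibleFor(profileNames []string, podSchedulerName string) bool {
	if podSchedulerName == "" {
		podSchedulerName = defaultSchedulerName
	}
	for _, name := range profileNames {
		if name == podSchedulerName {
			return true
		}
	}
	return false
}

func main() {
	sample := []string{"sample-scheduler"}
	fmt.Println(responsibleFor(sample, "sample-scheduler")) // true
	fmt.Println(responsibleFor(sample, ""))                 // false: left to default-scheduler
}
```

Because every scheduler ignores Pods that name a different scheduler, sample-scheduler and default-scheduler can run side by side without competing for the same Pods.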

The Kubernetes repository contains several example plugins that are useful as a reference when writing your own: https://github.com/kubernetes/kubernetes/tree/v1.27.3/pkg/scheduler/framework/plugins/examples

```go
// pkg/plugin/myplugin.go
package plugin

import (
	"context"
	"log"

	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

// Name is the name of the plugin used in the plugin registry and configurations.
const Name = "sample"

// sample is a scheduler plugin implementing the Filter and PreScore extension points.
type sample struct{}

// Compile-time checks that sample implements both interfaces.
var _ framework.FilterPlugin = &sample{}
var _ framework.PreScorePlugin = &sample{}

// New initializes a new plugin and returns it.
func New(_ runtime.Object, _ framework.Handle) (framework.Plugin, error) {
	return &sample{}, nil
}

// Name returns the name of the plugin.
func (pl *sample) Name() string {
	return Name
}

// Filter rejects every node that does not carry the label cpu=true.
func (pl *sample) Filter(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeInfo *framework.NodeInfo) *framework.Status {
	log.Printf("filter pod: %v, node: %v", pod.Name, nodeInfo)
	log.Println(state)

	node := nodeInfo.Node()
	if node == nil {
		return framework.NewStatus(framework.Error, "node not found")
	}

	// Exclude nodes without the cpu=true label.
	if node.Labels["cpu"] != "true" {
		return framework.NewStatus(framework.Unschedulable, "Node: "+node.Name)
	}
	return framework.NewStatus(framework.Success, "Node: "+node.Name)
}

// PreScore only logs the nodes that passed filtering.
func (pl *sample) PreScore(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodes []*v1.Node) *framework.Status {
	log.Println(nodes)
	return framework.NewStatus(framework.Success, "Node: "+pod.Name)
}
```

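The plugin implements both the Filter and PreScore extension points, but this article only demonstrates Filter.

The custom plugin is registered through app.NewSchedulerCommand() by passing the plugin's name and its constructor (see https://github.com/kubernetes-sigs/scheduler-plugins/blob/master/cmd/scheduler/main.go).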

```go
// cmd/scheduler/main.go: entry point that registers the plugin
package main

import (
	"os"

	"github.com/cfanbo/sample/pkg/plugin"
	"k8s.io/kubernetes/cmd/kube-scheduler/app"
)

func main() {
	// Register the out-of-tree plugin: its name plus its factory function.
	command := app.NewSchedulerCommand(
		app.WithPlugin(plugin.Name, plugin.New),
	)

	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}
```

Building the plugin

Compile the application into a binary (arm64 in this example):

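```
➜ GOOS=linux GOARCH=arm64 go build -ldflags "-X k8s.io/component-base/version.gitVersion=${VERSION} -w" -o bin/sample-scheduler cmd/scheduler/main.go
```

Here VERSION is a shell variable holding the version string to embed, for example v0.0.1 to match the image tag used below. Inside single quotes, a Makefile-style $(VERSION) would not be expanded by the shell.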
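The -X linker flag overwrites a package-level string variable at link time. The command above uses it to stamp a version into k8s.io/component-base/version.gitVersion. Below is a self-contained illustration. It uses a hypothetical main.gitVersion variable and a made-up default value in place of the real ones.

```go
package main

import "fmt"

// gitVersion is a hypothetical stand-in for k8s.io/component-base/version.gitVersion.
// Building with:
//   go build -ldflags "-X main.gitVersion=v0.0.1"
// replaces this default at link time; without the flag it is kept.
var gitVersion = "v0.0.0-unset"

// versionString returns the version banner the program would print.
func versionString() string {
	return "sample-scheduler " + gitVersion
}

func main() {
	fmt.Println(versionString())
}
```

-X only works on package-level string variables. That is why version information is kept in plain string variables rather than constants.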

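Run bin/sample-scheduler -h to see the available flags.

The scheduler could also be run directly on a host. However, the cluster already runs a scheduler there and the two might conflict, so for convenience we deploy the scheduler as a Pod.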

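Building the image

Dockerfile:

```dockerfile
FROM --platform=$TARGETPLATFORM ubuntu:20.04
WORKDIR .
COPY bin/sample-scheduler /usr/local/bin/
CMD ["sample-scheduler"]
```

For details on building the image, see https://blog.haohtml.com/archives/31052.

In production, use the smallest base image you can. ubuntu:20.04 is used here only for convenience, so the resulting image is fairly large.

```bash
docker buildx build --platform linux/arm64 -t cfanbo/sample-scheduler:v0.0.1 .
```

Push the image to a remote registry so that the Pod deployed later can pull it:

```bash
docker push cfanbo/sample-scheduler:v0.0.1
```

The environment used here is arm64.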

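Deploying the plugin

With the plugin implemented, what remains is deployment.

Registering the plugin in code is not enough to run it: it must also be enabled in a scheduler profile. You do this by writing a KubeSchedulerConfiguration file, which customizes the behavior of kube-scheduler.

In this article the scheduler runs as a Pod.

When the scheduler runs in a container, it needs a configuration file containing a KubeSchedulerConfiguration object. We mount this file into the container through a volume and pass it to the scheduler at startup.

Concretely, we create a ConfigMap that holds the KubeSchedulerConfiguration. We then mount it into a directory in the container as a volume, and point the scheduler at the file with --config.

Everything is defined in a single YAML file, which is convenient: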

```yaml
# sample-scheduler.yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: sample-scheduler-clusterrole
rules:
  - apiGroups:
      - ""
    resources:
      - namespaces
    verbs:
      - create
      - get
      - list
  - apiGroups:
      - ""
    resources:
      - endpoints
      - events
    verbs:
      - create
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - delete
      - get
      - list
      - watch
      - update
  - apiGroups:
      - ""
    resources:
      - bindings
      - pods/binding
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - pods/status
    verbs:
      - patch
      - update
  - apiGroups:
      - ""
    resources:
      - replicationcontrollers
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - apps
      - extensions
    resources:
      - replicasets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - apps
    resources:
      - statefulsets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - policy
    resources:
      - poddisruptionbudgets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - persistentvolumeclaims
      - persistentvolumes
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "storage.k8s.io"
    resources:
      - storageclasses
      - csinodes
      - csistoragecapacities
      - csidrivers
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "coordination.k8s.io"
    resources:
      - leases
    verbs:
      - create
      - get
      - list
      - update
  - apiGroups:
      - "events.k8s.io"
    resources:
      - events
    verbs:
      - create
      - patch
      - update
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: sample-scheduler-sa
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: sample-scheduler-clusterrolebinding
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: sample-scheduler-clusterrole
subjects:
  - kind: ServiceAccount
    name: sample-scheduler-sa
    namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: scheduler-config
  namespace: kube-system
data:
  scheduler-config.yaml: |
    apiVersion: kubescheduler.config.k8s.io/v1
    kind: KubeSchedulerConfiguration
    leaderElection:
      leaderElect: false
      leaseDuration: 15s
      renewDeadline: 10s
      resourceName: sample-scheduler
      resourceNamespace: kube-system
      retryPeriod: 2s
    profiles:
      - schedulerName: sample-scheduler
        plugins:
          filter:
            enabled:
              - name: sample
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-scheduler
  namespace: kube-system
  labels:
    component: sample-scheduler
spec:
  selector:
    matchLabels:
      component: sample-scheduler
  template:
    metadata:
      labels:
        component: sample-scheduler
    spec:
      serviceAccountName: sample-scheduler-sa
      priorityClassName: system-cluster-critical
      volumes:
        - name: scheduler-config
          configMap:
            name: scheduler-config
      containers:
        - name: scheduler
          image: cfanbo/sample-scheduler:v0.0.1
          imagePullPolicy: IfNotPresent
          command:
            - sample-scheduler
            - --config=/etc/kubernetes/scheduler-config.yaml
            - --v=3
          volumeMounts:
            - name: scheduler-config
              mountPath: /etc/kubernetes
```

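This YAML file does the following:

- Declares a KubeSchedulerConfiguration in a ConfigMap.

- Creates a Deployment whose container image, cfanbo/sample-scheduler:v0.0.1, is the scheduler we built above. The scheduler configuration is mounted into the container through a volume at /etc/kubernetes/scheduler-config.yaml and passed to the scheduler at startup. The log level is set to --v=3 to make debugging easier.

- Creates a ClusterRole that grants access to the required resources.

- Creates a ServiceAccount.

- Declares a ClusterRoleBinding that binds the ClusterRole to the ServiceAccount.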

Installing the plugin

```
➜ kubectl apply -f sample-scheduler.yaml
```

The scheduler now runs as a Pod in the kube-system namespace:

```
➜ kubectl get pod -n kube-system --selector=component=sample-scheduler
NAME                                READY   STATUS    RESTARTS   AGE
sample-scheduler-85cd75d775-jq4c7   1/1     Running   0          5m50s
```

Check the startup arguments of the process inside the Pod's container:

```
➜ kubectl exec -it -n kube-system pod/sample-scheduler-85cd75d775-jq4c7 -- ps -auxww
USER       PID %CPU %MEM    VSZ   RSS TTY      STAT START   TIME COMMAND
root         1  4.9  0.6 764160 55128 ?        Ssl  07:41   0:05 sample-scheduler --config=/etc/kubernetes/scheduler-config.yaml --v=3
root        39  0.0  0.0   5472  2284 pts/0    Rs+  07:43   0:00 ps -auxww
```

The scheduler process is running with the two arguments we specified.

In a new terminal, follow the scheduler's log output:

```
➜ kubectl logs -n kube-system -f sample-scheduler-85cd75d775-jq4c7
```

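Testing the plugin

At this point, Pods that use this scheduler should fail to schedule. No node has the cpu=true label yet, and our plugin rejects every node without it.

Unschedulable case

Create a Deployment whose Pod specifies sample-scheduler as its scheduler: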

```yaml
# test-scheduler.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-scheduler
spec:
  selector:
    matchLabels:
      app: test-scheduler
  template:
    metadata:
      labels:
        app: test-scheduler
    spec:
      schedulerName: sample-scheduler # scheduler to use; if omitted, default-scheduler is used
      containers:
        - image: nginx:1.23-alpine
          imagePullPolicy: IfNotPresent
          name: nginx
          ports:
            - containerPort: 80
```

```
➜ kubectl apply -f test-scheduler.yaml
```

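The plugin filters nodes, excluding those that cannot run the Pod: for every node, it checks whether the cpu=true label is present. A node without the label fails the filtering (predicate) phase. If no node passes, the subsequent scoring and binding steps never run, and the Pod stays unscheduled.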
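The following stdlib-only Go sketch models what happens during filtering for the four-node cluster used below. It is an approximation, not the scheduler's code. Two hypothetical functions stand in for default filters (an unschedulable check and a taint check) and run before the sample rule. Evaluation of a node stops at its first failing filter. When no node is feasible, the failure reasons are counted, sorted and joined into a message shaped like the scheduler's "0/N nodes are available" error. The node data mirrors the kubectl get node output and the error message shown later in this article.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

type node struct {
	Name          string
	Labels        map[string]string
	Unschedulable bool   // e.g. a cordoned node (SchedulingDisabled)
	Taint         string // key of an untolerated taint, "" if none
}

// filterFn returns "" when the node passes, otherwise a failure reason.
type filterFn func(n node) string

// Hypothetical stand-ins for two default filters followed by the sample
// plugin's rule, evaluated in this order.
var filters = []filterFn{
	func(n node) string {
		if n.Unschedulable {
			return "node(s) were unschedulable"
		}
		return ""
	},
	func(n node) string {
		if n.Taint != "" {
			return fmt.Sprintf("node(s) had untolerated taint {%s: }", n.Taint)
		}
		return ""
	},
	func(n node) string {
		if n.Labels["cpu"] != "true" {
			return "Node: " + n.Name
		}
		return ""
	},
}

// schedule returns the feasible node names and, when there are none, an
// approximation of the scheduler's "0/N nodes are available" message.
func schedule(nodes []node) ([]string, string) {
	var feasible []string
	reasons := map[string]int{}
	for _, n := range nodes {
		failed := ""
		for _, f := range filters {
			if failed = f(n); failed != "" {
				break // stop at the first failing filter for this node
			}
		}
		if failed == "" {
			feasible = append(feasible, n.Name)
		} else {
			reasons[failed]++
		}
	}
	if len(feasible) > 0 {
		return feasible, ""
	}
	var parts []string
	for reason, count := range reasons {
		parts = append(parts, fmt.Sprintf("%d %s", count, reason))
	}
	sort.Strings(parts)
	return nil, fmt.Sprintf("0/%d nodes are available: %s.", len(nodes), strings.Join(parts, ", "))
}

func main() {
	nodes := []node{
		{Name: "k8s"},
		{Name: "node1"},
		{Name: "node2", Taint: "node.kubernetes.io/unreachable"},
		{Name: "node3", Unschedulable: true},
	}
	_, msg := schedule(nodes)
	fmt.Println(msg)

	// Simulate `kubectl label nodes node1 cpu=true` and schedule again.
	nodes[1].Labels = map[string]string{"cpu": "true"}
	feasible, _ := schedule(nodes)
	fmt.Println(feasible) // [node1]
}
```

Running the filters in a fixed order and stopping at the first failure would also explain why, in the logs below, the plugin prints debug output only for k8s and node1.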

Let's check whether the Pod gets scheduled:

```
➜ kubectl get pods --selector=app=test-scheduler
NAME                              READY   STATUS    RESTARTS   AGE
test-scheduler-78c89768cf-5d9ct   0/1     Pending   0          11m
```

The Pod stays Pending, which means it cannot be scheduled. Let's look at its description:

```
➜ kubectl describe pod test-scheduler-78c89768cf-5d9ct
Name:             test-scheduler-78c89768cf-5d9ct
Namespace:        default
Priority:         0
Service Account:  default
Node:             <none>
Labels:           app=test-scheduler
                  pod-template-hash=78c89768cf
Annotations:      <none>
Status:           Pending
IP:
IPs:              <none>
Controlled By:    ReplicaSet/test-scheduler-78c89768cf
Containers:
  nginx:
    Image:        nginx:1.23-alpine
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-gptlh (ro)
Volumes:
  kube-api-access-gptlh:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:                      <none>
```

Both the Node and Events fields are empty.

Why? Is our plugin taking effect? Let's check the scheduler's logs:

```
# Add event for the Pod that was just created
I0731 09:14:44.663628 1 eventhandlers.go:118] "Add event for unscheduled pod" pod="default/test-scheduler-78c89768cf-5d9ct"

# The scheduler starts scheduling the Pod
I0731 09:14:44.663787 1 schedule_one.go:80] "Attempting to schedule pod" pod="default/test-scheduler-78c89768cf-5d9ct"

# Debug output from our plugin: for each node, one line from log.Printf() and one from log.Println()
2023/07/31 09:14:44 filter pod: test-scheduler-78c89768cf-5d9ct, node: &NodeInfo{Pods:[calico-apiserver-f654d8896-c97v9 calico-node-94q9p csi-node-driver-7bjxx nginx-984448cf6-45nrp nginx-984448cf6-zx67q ingress-nginx-controller-8c4c57cd9-n4lvm coredns-7bdc4cb885-m9sbg coredns-7bdc4cb885-npz8p etcd-k8s kube-apiserver-k8s kube-controller-manager-k8s kube-proxy-fxz6j kube-scheduler-k8s controller-7948676b95-b2zfd speaker-p779d minio-operator-67c694f5f6-b4s7h], RequestedResource:&framework.Resource{MilliCPU:1150, Memory:614465536, EphemeralStorage:524288000, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, NonZeroRequest: &framework.Resource{MilliCPU:2050, Memory:3131047936, EphemeralStorage:0, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, UsedPort: framework.HostPortInfo{"0.0.0.0":map[framework.ProtocolPort]struct {}{framework.ProtocolPort{Protocol:"TCP", Port:7472}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7946}:struct {}{}, framework.ProtocolPort{Protocol:"UDP", Port:7946}:struct {}{}}}, AllocatableResource:&framework.Resource{MilliCPU:4000, Memory:8081461248, EphemeralStorage:907082144291, AllowedPodNumber:110, ScalarResources:map[v1.ResourceName]int64{"hugepages-1Gi":0, "hugepages-2Mi":0, "hugepages-32Mi":0, "hugepages-64Ki":0}}}
2023/07/31 09:14:44 &{{{0 0} {[] {} 0x40003a8e80} map[PreFilterNodePorts:0x4000192280 PreFilterNodeResourcesFit:0x4000192288 PreFilterPodTopologySpread:0x40001922c8 PreFilterVolumeRestrictions:0x40001922a0 VolumeBinding:0x40001922c0 kubernetes.io/pods-to-activate:0x4000192248] 4} false map[InterPodAffinity:{} NodeAffinity:{} VolumeBinding:{} VolumeZone:{}] map[]}
2023/07/31 09:14:44 filter pod: test-scheduler-78c89768cf-5d9ct, node: &NodeInfo{Pods:[calico-apiserver-f654d8896-flfhb calico-kube-controllers-789dc4c76b-h29t5 calico-node-cgm8v calico-typha-5794d6dbd8-7gz5n csi-node-driver-jsxcz nginx-984448cf6-jcwfs nginx-984448cf6-jcwp2 nginx-984448cf6-r6vmg nginx-deployment-7554c7bd74-s2kfn kube-proxy-hgscf sample-scheduler-85cd75d775-jq4c7 speaker-lvp5l minio console-6bdf84b844-vzg72 minio-operator-67c694f5f6-g2bll tigera-operator-549d4f9bdb-txh45], RequestedResource:&framework.Resource{MilliCPU:200, Memory:268435456, EphemeralStorage:524288000, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, NonZeroRequest: &framework.Resource{MilliCPU:1800, Memory:3623878656, EphemeralStorage:0, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, UsedPort: framework.HostPortInfo{"0.0.0.0":map[framework.ProtocolPort]struct {}{framework.ProtocolPort{Protocol:"TCP", Port:5473}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7472}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7946}:struct {}{}, framework.ProtocolPort{Protocol:"UDP", Port:7946}:struct {}{}}}, AllocatableResource:&framework.Resource{MilliCPU:4000, Memory:8081461248, EphemeralStorage:907082144291, AllowedPodNumber:110, ScalarResources:map[v1.ResourceName]int64{"hugepages-1Gi":0, "hugepages-2Mi":0, "hugepages-32Mi":0, "hugepages-64Ki":0}}}
2023/07/31 09:14:44 &{{{0 0} {[] {} 0x40007be050} map[] 0} false map[InterPodAffinity:{} NodeAffinity:{} VolumeBinding:{} VolumeZone:{}] map[]}
I0731 09:14:44.665942 1 schedule_one.go:867] "Unable to schedule pod; no fit; waiting" pod="default/test-scheduler-78c89768cf-5d9ct" err="0/4 nodes are available: 1 Node: k8s, 1 Node: node1, 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }, 1 node(s) were unschedulable. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.."

# Result: scheduling failed
2023/07/31 09:14:44.666174 1 schedule_one.go:943] "Updating pod condition" pod="default/test-scheduler-78c89768cf-5d9ct" conditionType=PodScheduled conditionStatus=False conditionReason="Unschedulable"
```

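The environment has four nodes, but only k8s and node1 are usable:

```
➜ kubectl get node
NAME    STATUS                        ROLES           AGE   VERSION
k8s     Ready                         control-plane   65d   v1.27.1
node1   Ready                         <none>          64d   v1.27.2
node2   NotReady                      <none>          49d   v1.27.2
node3   NotReady,SchedulingDisabled   <none>          44d   v1.27.2
```

The log output shows that the plugin is in effect and intervenes in the Pod's scheduling exactly as intended. So far everything behaves as expected. Note that the plugin's debug output appears only for k8s and node1. According to the error message, node2 was rejected because of an untolerated taint and node3 because it is unschedulable, so those two nodes were evidently filtered out by default plugins before ours ran.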

Restoring scheduling

Now let's make the Pod schedulable again by adding the label cpu=true to node1:

```
➜ kubectl label nodes node1 cpu=true
node/node1 labeled

➜ kubectl get nodes -l=cpu=true
NAME    STATUS   ROLES    AGE   VERSION
node1   Ready    <none>   64d   v1.27.2
```

Check the scheduler log again:

```
# Scheduling the Pod
I0731 09:24:16.616059 1 schedule_one.go:80] "Attempting to schedule pod" pod="default/test-scheduler-78c89768cf-5d9ct"

# Debug output from our plugin
2023/07/31 09:24:16 filter pod: test-scheduler-78c89768cf-5d9ct, node: &NodeInfo{Pods:[calico-apiserver-f654d8896-c97v9 calico-node-94q9p csi-node-driver-7bjxx nginx-984448cf6-45nrp nginx-984448cf6-zx67q ingress-nginx-controller-8c4c57cd9-n4lvm coredns-7bdc4cb885-m9sbg coredns-7bdc4cb885-npz8p etcd-k8s kube-apiserver-k8s kube-controller-manager-k8s kube-proxy-fxz6j kube-scheduler-k8s controller-7948676b95-b2zfd speaker-p779d minio-operator-67c694f5f6-b4s7h], RequestedResource:&framework.Resource{MilliCPU:1150, Memory:614465536, EphemeralStorage:524288000, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, NonZeroRequest: &framework.Resource{MilliCPU:2050, Memory:3131047936, EphemeralStorage:0, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, UsedPort: framework.HostPortInfo{"0.0.0.0":map[framework.ProtocolPort]struct {}{framework.ProtocolPort{Protocol:"TCP", Port:7472}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7946}:struct {}{}, framework.ProtocolPort{Protocol:"UDP", Port:7946}:struct {}{}}}, AllocatableResource:&framework.Resource{MilliCPU:4000, Memory:8081461248, EphemeralStorage:907082144291, AllowedPodNumber:110, ScalarResources:map[v1.ResourceName]int64{"hugepages-1Gi":0, "hugepages-2Mi":0, "hugepages-32Mi":0, "hugepages-64Ki":0}}}
2023/07/31 09:24:16 &{{{0 0} {[] {} 0x4000a0ee80} map[PreFilterNodePorts:0x400087c110 PreFilterNodeResourcesFit:0x400087c120 PreFilterPodTopologySpread:0x400087c158 PreFilterVolumeRestrictions:0x400087c130 VolumeBinding:0x400087c148 kubernetes.io/pods-to-activate:0x400087c0d0] 4} true map[InterPodAffinity:{} NodeAffinity:{} VolumeBinding:{} VolumeZone:{}] map[]}
2023/07/31 09:24:16 filter pod: test-scheduler-78c89768cf-5d9ct, node: &NodeInfo{Pods:[calico-apiserver-f654d8896-flfhb calico-kube-controllers-789dc4c76b-h29t5 calico-node-cgm8v calico-typha-5794d6dbd8-7gz5n csi-node-driver-jsxcz nginx-984448cf6-jcwfs nginx-984448cf6-jcwp2 nginx-984448cf6-r6vmg nginx-deployment-7554c7bd74-s2kfn kube-proxy-hgscf sample-scheduler-85cd75d775-jq4c7 speaker-lvp5l minio console-6bdf84b844-vzg72 minio-operator-67c694f5f6-g2bll tigera-operator-549d4f9bdb-txh45], RequestedResource:&framework.Resource{MilliCPU:200, Memory:268435456, EphemeralStorage:524288000, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, NonZeroRequest: &framework.Resource{MilliCPU:1800, Memory:3623878656, EphemeralStorage:0, AllowedPodNumber:0, ScalarResources:map[v1.ResourceName]int64(nil)}, UsedPort: framework.HostPortInfo{"0.0.0.0":map[framework.ProtocolPort]struct {}{framework.ProtocolPort{Protocol:"TCP", Port:5473}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7472}:struct {}{}, framework.ProtocolPort{Protocol:"TCP", Port:7946}:struct {}{}, framework.ProtocolPort{Protocol:"UDP", Port:7946}:struct {}{}}}, AllocatableResource:&framework.Resource{MilliCPU:4000, Memory:8081461248, EphemeralStorage:907082144291, AllowedPodNumber:110, ScalarResources:map[v1.ResourceName]int64{"hugepages-1Gi":0, "hugepages-2Mi":0, "hugepages-32Mi":0, "hugepages-64Ki":0}}}
2023/07/31 09:24:16 &{{{0 0} {[] {} 0x4000a0f2b0} map[] 0} true map[InterPodAffinity:{} NodeAffinity:{} VolumeBinding:{} VolumeZone:{}] map[]}

# Binding the Pod to the node
I0731 09:24:16.619544 1 default_binder.go:53] "Attempting to bind pod to node" pod="default/test-scheduler-78c89768cf-5d9ct" node="node1"
I0731 09:24:16.640234 1 eventhandlers.go:161] "Delete event for unscheduled pod" pod="default/test-scheduler-78c89768cf-5d9ct"
I0731 09:24:16.642564 1 schedule_one.go:252] "Successfully bound pod to node" pod="default/test-scheduler-78c89768cf-5d9ct" node="node1" evaluatedNodes=4 feasibleNodes=1
I0731 09:24:16.643173 1 eventhandlers.go:186] "Add event for scheduled pod" pod="default/test-scheduler-78c89768cf-5d9ct"
```

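The log indicates that the Pod was scheduled (evaluatedNodes=4, feasibleNodes=1). Let's confirm with the Pod status:

```
➜ kubectl get pods --selector=app=test-scheduler
NAME                              READY   STATUS    RESTARTS   AGE
test-scheduler-78c89768cf-5d9ct   1/1     Running   0          10m
```

The Pod went from Pending to Running, so it was indeed scheduled.

Let's look at the Pod's description now: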

```
➜ kubectl describe pod test-scheduler-78c89768cf-5d9ct
Name:             test-scheduler-78c89768cf-5d9ct
Namespace:        default
Priority:         0
Service Account:  default
Node:             node1/192.168.0.205
Start Time:       Mon, 31 Jul 2023 17:24:16 +0800
Labels:           app=test-scheduler
                  pod-template-hash=78c89768cf
Annotations:      cni.projectcalico.org/containerID: 33e3ffc74e4b2fa15cae210c65d3d4be6a8eadc431e7201185ffa1b1a29cc51d
                  cni.projectcalico.org/podIP: 10.244.166.182/32
                  cni.projectcalico.org/podIPs: 10.244.166.182/32
Status:           Running
IP:               10.244.166.182
IPs:
  IP:           10.244.166.182
Controlled By:  ReplicaSet/test-scheduler-78c89768cf
Containers:
  nginx:
    Container ID:   docker://97896d4c4fec2bae294d02125562bc29d769911c7e47e5f4020b1de24ce9c367
    Image:          nginx:1.23-alpine
    Image ID:       docker://sha256:510900496a6c312a512d8f4ba0c69586e0fbd540955d65869b6010174362c313
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Mon, 31 Jul 2023 17:24:18 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-wmh9d (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  kube-api-access-wmh9d:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age    From              Message
  ----     ------            ----   ----              -------
  Warning  FailedScheduling  14m    sample-scheduler  0/4 nodes are available: 1 Node: k8s, 1 Node: node1, 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }, 1 node(s) were unschedulable. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling..
  Warning  FailedScheduling  9m27s  sample-scheduler  0/4 nodes are available: 1 Node: k8s, 1 Node: node1, 1 node(s) had untolerated taint {node.kubernetes.io/unreachable: }, 1 node(s) were unschedulable. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling..
  Normal   Scheduled         5m9s   sample-scheduler  Successfully assigned default/test-scheduler-78c89768cf-5d9ct to node1
  Normal   Pulled            5m8s   kubelet           Container image "nginx:1.23-alpine" already present on machine
  Normal   Created           5m8s   kubelet           Created container nginx
  Normal   Started           5m8s   kubelet           Started container nginx
```

The Events field shows the events from before and after we added the label to node1.

That essentially completes the plugin development.

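Summary

This example is deliberately simple so that it is easy to follow. Whatever kind of plugin you want to write, implement the interface of the corresponding extension point, then enable the plugin at that extension point in the configuration file.

For the custom scheduler, main.go registers the custom plugin with

```go
app.NewSchedulerCommand(
	app.WithPlugin(plugin.Name, plugin.New),
)
```

The file passed with --config then determines whether the plugin is enabled and at which extension points.

In this article the scheduler runs as a Pod, with its configuration mounted at /etc/kubernetes/scheduler-config.yaml. Alternatively, you could modify the configuration of the cluster's kube-scheduler to add a new scheduler profile. That approach is quite invasive to the cluster, however, so I do not recommend it.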

FAQ

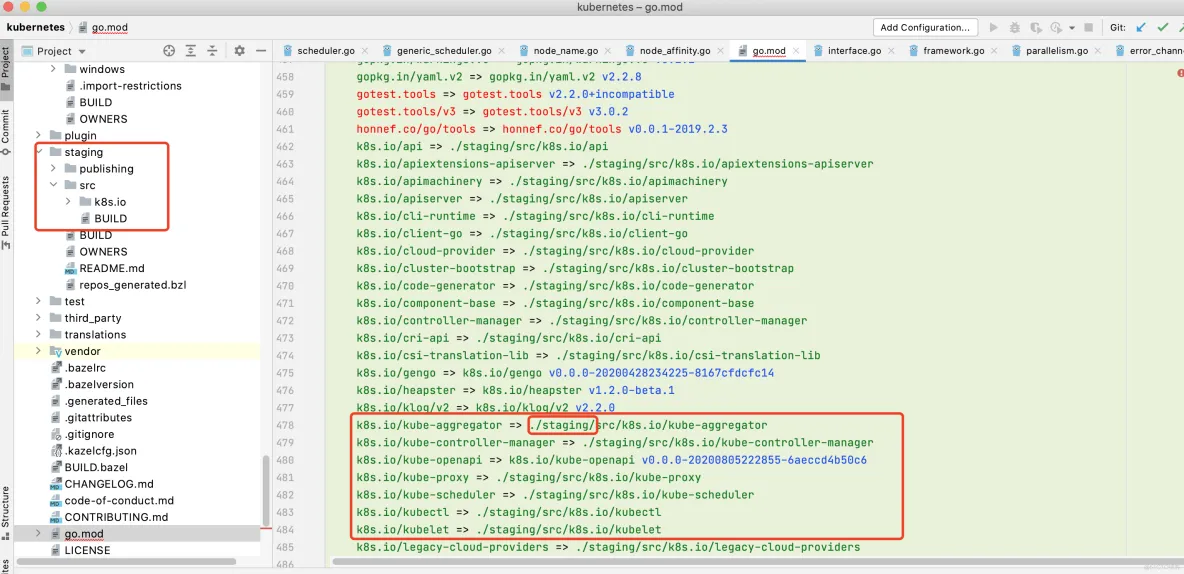

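Here, every Kubernetes dependency is resolved through replace directives that point into the staging directory of a local Kubernetes source checkout. Only with those directives did the build work.

Without the replace directives, downloading the Kubernetes dependencies fails:

```
k8s.io/kubernetes@<version> requires
	k8s.io/api@v0.0.0: reading https://goproxy.io/k8s.io/api/@v/v0.0.0.mod: 404 Not Found
	server response: not found: k8s.io/api@v0.0.0: invalid version: unknown revision v0.0.0
```

I was stuck on this for a long time and could not see why, and specifying a version number did not help either. The cause is how k8s.io/kubernetes is structured. Its go.mod requires its staging modules (k8s.io/api, k8s.io/client-go and so on) at the placeholder version v0.0.0, and maps them to ./staging/src/k8s.io/... with its own replace directives. Go honors replace directives only in the main module. A project that imports k8s.io/kubernetes therefore has to provide equivalent replace directives itself. These can point either at a local checkout, as below, or at the published versions of the staging modules.

Here is my go.mod: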

```
module github.com/cfanbo/sample

go 1.20

require (
	github.com/spf13/cobra v1.6.0
	k8s.io/kubernetes v0.0.0
)

require (
	github.com/Azure/go-ansiterm v0.0.0-20210617225240-d185dfc1b5a1 // indirect
	github.com/NYTimes/gziphandler v1.1.1 // indirect
	github.com/antlr/antlr4/runtime/Go/antlr v1.4.10 // indirect
	github.com/asaskevich/govalidator v0.0.0-20190424111038-f61b66f89f4a // indirect
	github.com/beorn7/perks v1.0.1 // indirect
	github.com/blang/semver/v4 v4.0.0 // indirect
	github.com/cenkalti/backoff/v4 v4.1.3 // indirect
	github.com/cespare/xxhash/v2 v2.1.2 // indirect
	github.com/coreos/go-semver v0.3.0 // indirect
	github.com/coreos/go-systemd/v22 v22.4.0 // indirect
	github.com/davecgh/go-spew v1.1.1 // indirect
	github.com/docker/distribution v2.8.1+incompatible // indirect
	github.com/emicklei/go-restful/v3 v3.9.0 // indirect
	github.com/evanphx/json-patch v4.12.0+incompatible // indirect
	github.com/felixge/httpsnoop v1.0.3 // indirect
	github.com/fsnotify/fsnotify v1.6.0 // indirect
	github.com/go-logr/logr v1.2.3 // indirect
	github.com/go-logr/stdr v1.2.2 // indirect
	github.com/go-openapi/jsonpointer v0.19.6 // indirect
	github.com/go-openapi/jsonreference v0.20.1 // indirect
	github.com/go-openapi/swag v0.22.3 // indirect
	github.com/gogo/protobuf v1.3.2 // indirect
	github.com/golang/groupcache v0.0.0-20210331224755-41bb18bfe9da // indirect
	github.com/golang/protobuf v1.5.3 // indirect
	github.com/google/cel-go v0.12.6 // indirect
	github.com/google/gnostic v0.5.7-v3refs // indirect
	github.com/google/go-cmp v0.5.9 // indirect
	github.com/google/gofuzz v1.1.0 // indirect
	github.com/google/uuid v1.3.0 // indirect
	github.com/grpc-ecosystem/go-grpc-prometheus v1.2.0 // indirect
	github.com/grpc-ecosystem/grpc-gateway/v2 v2.7.0 // indirect
	github.com/imdario/mergo v0.3.6 // indirect
	github.com/inconshreveable/mousetrap v1.0.1 // indirect
	github.com/josharian/intern v1.0.0 // indirect
	github.com/json-iterator/go v1.1.12 // indirect
	github.com/mailru/easyjson v0.7.7 // indirect
	github.com/matttproud/golang_protobuf_extensions v1.0.2 // indirect
	github.com/mitchellh/mapstructure v1.4.1 // indirect
	github.com/moby/sys/mountinfo v0.6.2 // indirect
	github.com/moby/term v0.0.0-20221205130635-1aeaba878587 // indirect
	github.com/modern-go/concurrent v0.0.0-20180306012644-bacd9c7ef1dd // indirect
	github.com/modern-go/reflect2 v1.0.2 // indirect
	github.com/munnerz/goautoneg v0.0.0-20191010083416-a7dc8b61c822 // indirect
	github.com/opencontainers/go-digest v1.0.0 // indirect
	github.com/opencontainers/selinux v1.10.0 // indirect
	github.com/pkg/errors v0.9.1 // indirect
	github.com/prometheus/client_golang v1.14.0 // indirect
	github.com/prometheus/client_model v0.3.0 // indirect
	github.com/prometheus/common v0.37.0 // indirect
	github.com/prometheus/procfs v0.8.0 // indirect
	github.com/spf13/pflag v1.0.5 // indirect
	github.com/stoewer/go-strcase v1.2.0 // indirect
	go.etcd.io/etcd/api/v3 v3.5.7 // indirect
	go.etcd.io/etcd/client/pkg/v3 v3.5.7 // indirect
	go.etcd.io/etcd/client/v3 v3.5.7 // indirect
	go.opentelemetry.io/contrib/instrumentation/google.golang.org/grpc/otelgrpc v0.35.0 // indirect
	go.opentelemetry.io/contrib/instrumentation/net/http/otelhttp v0.35.1 // indirect
	go.opentelemetry.io/otel v1.10.0 // indirect
	go.opentelemetry.io/otel/exporters/otlp/internal/retry v1.10.0 // indirect
	go.opentelemetry.io/otel/exporters/otlp/otlptrace v1.10.0 // indirect
	go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc v1.10.0 // indirect
	go.opentelemetry.io/otel/metric v0.31.0 // indirect
	go.opentelemetry.io/otel/sdk v1.10.0 // indirect
	go.opentelemetry.io/otel/trace v1.10.0 // indirect
	go.opentelemetry.io/proto/otlp v0.19.0 // indirect
	go.uber.org/atomic v1.7.0 // indirect
	go.uber.org/multierr v1.6.0 // indirect
	go.uber.org/zap v1.19.0 // indirect
	golang.org/x/crypto v0.1.0 // indirect
	golang.org/x/net v0.8.0 // indirect
	golang.org/x/oauth2 v0.0.0-20220223155221-ee480838109b // indirect
	golang.org/x/sync v0.1.0 // indirect
	golang.org/x/sys v0.6.0 // indirect
	golang.org/x/term v0.6.0 // indirect
	golang.org/x/text v0.8.0 // indirect
	golang.org/x/time v0.0.0-20220210224613-90d013bbcef8 // indirect
	google.golang.org/appengine v1.6.7 // indirect
	google.golang.org/genproto v0.0.0-20220502173005-c8bf987b8c21 // indirect
	google.golang.org/grpc v1.51.0 // indirect
	google.golang.org/protobuf v1.28.1 // indirect
	gopkg.in/inf.v0 v0.9.1 // indirect
	gopkg.in/natefinch/lumberjack.v2 v2.0.0 // indirect
	gopkg.in/yaml.v2 v2.4.0 // indirect
	gopkg.in/yaml.v3 v3.0.1 // indirect
	k8s.io/api v0.0.0 // indirect
	k8s.io/apimachinery v0.0.0 // indirect
	k8s.io/apiserver v0.0.0 // indirect
	k8s.io/client-go v0.0.0 // indirect
	k8s.io/cloud-provider v0.0.0 // indirect
	k8s.io/component-base v0.0.0 // indirect
	k8s.io/component-helpers v0.0.0 // indirect
	k8s.io/controller-manager v0.0.0 // indirect
	k8s.io/csi-translation-lib v0.0.0 // indirect
	k8s.io/dynamic-resource-allocation v0.0.0 // indirect
	k8s.io/klog/v2 v2.90.1 // indirect
	k8s.io/kms v0.0.0 // indirect
	k8s.io/kube-openapi v0.0.0-20230501164219-8b0f38b5fd1f // indirect
	k8s.io/kube-scheduler v0.0.0 // indirect
	k8s.io/kubelet v0.0.0 // indirect
	k8s.io/mount-utils v0.0.0 // indirect
	k8s.io/utils v0.0.0-20230209194617-a36077c30491 // indirect
	sigs.k8s.io/apiserver-network-proxy/konnectivity-client v0.1.2 // indirect
	sigs.k8s.io/json v0.0.0-20221116044647-bc3834ca7abd // indirect
	sigs.k8s.io/structured-merge-diff/v4 v4.2.3 // indirect
	sigs.k8s.io/yaml v1.3.0 // indirect
)

// Replace the Kubernetes modules with paths into a local Kubernetes source checkout
replace (
	k8s.io/api => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/api
	k8s.io/apiextensions-apiserver => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/apiextensions-apiserver
	k8s.io/apimachinery => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/apimachinery
	k8s.io/apiserver => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/apiserver
	k8s.io/cli-runtime => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/cli-runtime
	k8s.io/client-go => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/client-go
	k8s.io/cloud-provider => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/cloud-provider
	k8s.io/cluster-bootstrap => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/cluster-bootstrap
	k8s.io/code-generator => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/code-generator
	k8s.io/component-base => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/component-base
	k8s.io/component-helpers => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/component-helpers
	k8s.io/controller-manager => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/controller-manager
	k8s.io/cri-api => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/cri-api
	k8s.io/csi-translation-lib => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/csi-translation-lib
	k8s.io/dynamic-resource-allocation => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/dynamic-resource-allocation
	k8s.io/kms => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kms
	k8s.io/kube-aggregator => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kube-aggregator
	k8s.io/kube-controller-manager => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kube-controller-manager
	k8s.io/kube-proxy => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kube-proxy
	k8s.io/kube-scheduler => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kube-scheduler
	k8s.io/kubectl => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kubectl
	k8s.io/kubelet => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/kubelet
	k8s.io/kubernetes => /Users/sxf/workspace/kubernetes
	k8s.io/legacy-cloud-providers => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/legacy-cloud-providers
	k8s.io/metrics => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/metrics
	k8s.io/mount-utils => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/mount-utils
	k8s.io/pod-security-admission => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/pod-security-admission
	k8s.io/sample-apiserver => /Users/sxf/workspace/kubernetes/staging/src/k8s.io/sample-apiserver
)
```

Some of these dependencies are unused. In a real project, run go mod tidy to clean them up.
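An alternative to pointing the replace directives at a local checkout is to pin each staging module to its published tag. For Kubernetes v1.27.3, the staging modules such as k8s.io/api are tagged v0.27.3. Below is a small stdlib-only helper that renders such a replace block. It is a hypothetical sketch, not part of any Kubernetes tooling; the module list passed to it is up to you.

```go
package main

import (
	"fmt"
	"strings"
)

// stagingVersion maps a Kubernetes release such as "v1.27.3" to the tag
// used by its staging modules (k8s.io/api, k8s.io/client-go, ...): "v0.27.3".
func stagingVersion(k8sVersion string) (string, error) {
	if !strings.HasPrefix(k8sVersion, "v1.") {
		return "", fmt.Errorf("unsupported Kubernetes version %q", k8sVersion)
	}
	return "v0." + strings.TrimPrefix(k8sVersion, "v1."), nil
}

// replaceBlock renders go.mod replace directives that pin each staging module
// to the published tag matching the given Kubernetes release.
func replaceBlock(k8sVersion string, modules []string) (string, error) {
	v, err := stagingVersion(k8sVersion)
	if err != nil {
		return "", err
	}
	var b strings.Builder
	b.WriteString("replace (\n")
	for _, m := range modules {
		fmt.Fprintf(&b, "\t%s => %s %s\n", m, m, v)
	}
	b.WriteString(")\n")
	return b.String(), nil
}

func main() {
	// A subset of the staging modules listed in the go.mod above.
	modules := []string{"k8s.io/api", "k8s.io/apimachinery", "k8s.io/client-go", "k8s.io/kube-scheduler"}
	out, err := replaceBlock("v1.27.3", modules)
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

With such a block, the require line for k8s.io/kubernetes itself would carry the real release (for example v1.27.3) instead of v0.0.0, and the build no longer depends on a checkout at a machine-specific path.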

Further reading: Scheduler extensions

References

- https://github.com/kubernetes-sigs/scheduler-plugins

- https://github.com/kubernetes-sigs/scheduler-plugins/blob/master/doc/install.md

- https://github.com/kubernetes-sigs/scheduler-plugins/blob/master/doc/develop.md

- https://github.com/kubernetes-sigs/scheduler-plugins/blob/master/cmd/scheduler/main.go

- https://kubernetes.io/zh-cn/docs/concepts/scheduling-eviction/scheduling-framework/

- https://kubernetes.io/zh-cn/docs/reference/scheduling/config/

- https://www.qikqiak.com/post/custom-kube-scheduler/

- https://github.com/kubernetes/enhancements/tree/master/keps/sig-scheduling/624-scheduling-framework#custom-scheduler-plugins-out-of-tree

- https://www.xiexianbin.cn/kubernetes/scheduler/creating-a-k8s-scheduler-plugin/index.html

- https://mp.weixin.qq.com/s/FGzwDsrjCNesiNbYc3kLcA

- https://github.com/kelseyhightower/kubernetes-the-hard-way/blob/master/docs/08-bootstrapping-kubernetes-controllers.md#configure-the-kubernetes-scheduler

- https://developer.ibm.com/articles/creating-a-custom-kube-scheduler/

- https://www.bilibili.com/video/BV1tT4y1o77z/